
Combining the best aspects of robust unsupervised statistical latent "theme" generation models of text with supervised annotation models for better data organization and simultaneous summarization.

The advantage of using probabilistic models is that we do not have to worry about choosing a metric that may or may not be suitable for algebraic manipulation of the data at hand. Instead, we encode our assumptions in the form of specific classes of probability distributions.

Something about me

My resume: pdf (2 pages) | pdf (longer)

Thank you notes

(+) JJC introduced me to topic models on text and images.
(+) An important hint from JBG helped me complete my journey!
(+) My thesis is perhaps being shaped most significantly by MJB and DMB.

Datasets I Have Recently Used

(+) Real-life videos downloaded from the Web (TRECVID)
(+) Newswire articles for summarization (TAC Guided Summarization track, a standard dataset for evaluating system performance)
(+) Open source data from kdnuggets
(+) Multi-domain sentiment dataset based on Amazon reviews
(+) Datasets from Yahoo! Sandbox


Academic activities

(+) I am happy to call the University at Buffalo, The State University of New York, my alma mater!

Important references

(+) Important results on matrix algebra for statistics can be found in this Matrix Cookbook.
(+) Jonathan Shewchuk's Painless Conjugate Gradient (PCG).

A ≈110-word summary of PCG: The intuition behind CG is easiest to see for a quadratic f(x) = ½xᵀAx − bᵀx + c with x ∈ ℝ^P and A symmetric positive-definite. When A is not diagonal, e.g., for x ∈ ℝ², slicing the surface of f one latitude at a time and projecting onto the plane gives rings (contours) that are not axis-aligned ellipses. From the residuals encountered while searching for the optimum x*, CG builds P search directions that are A-orthogonal (conjugate): dᵢᵀAdⱼ = 0 for i ≠ j. In the basis of these directions the transformed matrix is diagonal, the contours of the convex f become axis-aligned, and, in exact arithmetic, minimization finishes in at most P steps.

Next-generation challenge: can a machine automatically generate this summary from PCG? This is like building an artificial assistant that can study a quantum phenomenon and sum it up by saying "Everything that can happen does happen."
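To make the CG summary concrete, here is a minimal textbook conjugate gradient sketch, assuming Python with NumPy (the page itself contains no code). The function name `conjugate_gradient`, its parameters, and the 2×2 test matrix are illustrative choices, not taken from Shewchuk's note. The function also returns the search directions it used, so the A-orthogonality claim dᵢᵀAdⱼ = 0 for i ≠ j can be checked directly.

```python
import numpy as np


def conjugate_gradient(A, b, x0=None, tol=1e-10, max_iter=None):
    """Minimize f(x) = 0.5 * x^T A x - b^T x (i.e. solve A x = b) by CG.

    Assumes A is symmetric positive definite. In exact arithmetic CG needs
    at most P = len(b) iterations; max_iter defaults to P and can be raised
    to compensate for floating-point round-off on ill-conditioned problems.
    Returns the solution and the search directions used (one per row).
    """
    P = b.shape[0]
    max_iter = P if max_iter is None else max_iter
    x = np.zeros(P) if x0 is None else np.asarray(x0, dtype=float).copy()
    r = b - A @ x            # residual = negative gradient of f at x
    d = r.copy()             # first direction: steepest descent
    rs_old = r @ r
    directions = []
    for _ in range(max_iter):
        if np.sqrt(rs_old) < tol:
            break
        Ad = A @ d
        alpha = rs_old / (d @ Ad)   # exact line search along d
        x = x + alpha * d
        r = r - alpha * Ad
        rs_new = r @ r
        directions.append(d)
        # This beta keeps the new direction A-orthogonal (conjugate)
        # to all previous ones, in exact arithmetic.
        d = r + (rs_new / rs_old) * d
        rs_old = rs_new
    return x, np.array(directions)


# Symmetric positive-definite but non-diagonal A: the contours are tilted ellipses.
A = np.array([[4.0, 1.0],
              [1.0, 3.0]])
b = np.array([1.0, 2.0])
x, D = conjugate_gradient(A, b)
print(x)                          # approximately [1/11, 7/11]
print(D[0] @ A @ D[1])            # approximately 0: the two directions are conjugate
print(np.round(D @ A @ D.T, 12))  # diagonal: in this basis f's quadratic term is axis-aligned
```

Because the returned directions are conjugate, `D @ A @ D.T` is diagonal up to round-off, which is exactly the "axis-aligned contours in the new basis" picture; for P = 2 the solve finishes in two iterations.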

Quite a feat! Read my uncle Anadish's story on Wikipedia and on his homepage.

Disclaimer

All views expressed in this subset of web pages are my own and do not necessarily reflect the views of my department or school.


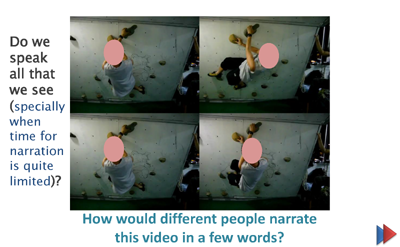

How are we generating, organizing and summarizing content from the ground up (i.e., from low-level visual features)? More importantly, how are we organizing all the data with minimal supervision, knowing what to do when we do not know what to do?


Simultaneous Joint and Conditional Modeling of Documents Tagged from Two Perspectives - TagSquaredLDA

We applied unsupervised statistical tag-topic models to different problems of interest.

The proposed tag-topic models are novel in three respects: (i) they consider two different tag perspectives, a document-level tag perspective that is relevant to the document as a whole and a word-level tag perspective pertaining to each word in the document; (ii) they associate latent topics with word-level tags and, in the case of multimedia documents, label latent topics with images; and (iii) they discover the possible correspondence of words to document-level tags.

It was good to observe that supervised models, such as those involving some form of structured prediction, can be improved independently of unsupervised models while still helping the latter organize documents better and even enabling document navigation through faceted latent topics.

Latent Dirichlet Allocation in Slow Motion

A while back, I spent some time drafting what goes through LDA's mind when it crunches count data: the journey of parametric, hierarchical, multinomial, generative, mixed-membership models such as LDA (Latent Dirichlet Allocation).
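For a "slow motion" view in code, here is a sketch of the standard LDA generative process, assuming Python with NumPy (the page links to a write-up, not code). It draws the topics themselves from a symmetric Dirichlet, as in the smoothed variant of LDA; the function name `generate_lda_corpus`, the sizes K and V, and the hyperparameter values are hypothetical. The only thing LDA actually observes is the document-term count matrix; posterior inference works backwards from `counts` to the latent `theta`, `z` and topics.

```python
import numpy as np


def generate_lda_corpus(n_docs, doc_len, alpha, topics, rng):
    """Sample a toy corpus from the standard LDA generative process.

    alpha:  Dirichlet prior over per-document topic proportions, shape (K,)
    topics: topic-word distributions, shape (K, V); each row sums to 1
    Returns the document-term count matrix (what LDA observes), plus the
    latent topic assignments and topic proportions that generated it.
    """
    K, V = topics.shape
    counts, assignments, thetas = [], [], []
    for _ in range(n_docs):
        theta = rng.dirichlet(alpha)                  # topic proportions for this document
        z = rng.choice(K, size=doc_len, p=theta)      # a topic for every word slot
        w = np.array([rng.choice(V, p=topics[k]) for k in z])  # each word drawn from its topic
        counts.append(np.bincount(w, minlength=V))    # word order discarded: bag of words
        assignments.append(z)
        thetas.append(theta)
    return np.array(counts), assignments, np.array(thetas)


rng = np.random.default_rng(0)
K, V = 3, 20                                          # hypothetical number of topics and vocabulary size
topics = rng.dirichlet(np.full(V, 0.1), size=K)       # sparse topics from a symmetric Dirichlet
alpha = np.full(K, 0.5)                               # hypothetical document-topic prior
counts, z, theta = generate_lda_corpus(5, 50, alpha, topics, rng)
print(counts.shape)        # (5, 20)
print(counts.sum(axis=1))  # [50 50 50 50 50]
```

Each row of `counts` sums to the document length because every word slot contributes exactly one count; everything else (`theta`, `z`, `topics`) stays hidden from the model.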

Summarizing Multimedia into Text through Latent Topics

> We proposed a multimedia topic modeling framework that provides a basis for automatically discovering semantically related words in the textual metadata of multimedia documents and translating them to semantically related videos or video frames. In addition, we can predict the text description of a test video within a known domain.

> It also turned out that simply predicting more and more relevant keywords (or creating sentences out of them) does not improve the relevancy of the predicted descriptions. Instead, selecting sentences from the training set in an intuitive way almost doubles the relevancy of the lingual descriptions. This kind of text mining may seem a little counter-intuitive, but consider an analogy: in comedy shows such as The Daily Show, a comedian takes exact sentences from a "training set" (humor-inducing quotes) and then manipulates or augments them to increase the relevancy of a joke, as measured by the loudness of the audience's applause. The training set thus comprises a recent set of quotes that induce laughter within the comedian's team, the test set is a new audience each day, and the task is to predict which of those quotes (followed by witty comments and illustrations) will induce the greatest laughter in the audience.
